https://github.com/graviton-studio/logos

Toni and I have been building a fun little side project.

Our goal was to understand how to build MCP servers and integrate them with various tools, and to share a template that helps other people get started and easily run this themselves. Here is a deep dive into how the system works.

There are a few key workflows within Logos. The first is the agent builder: you enter a prompt, and it produces an agent that carries out a specific series of tasks. For example, let’s say we’re a VC that wants an agent to monitor portfolio companies' hiring needs against our network connections in Airtable and then make introductions through Gmail. We would enter that request, and the result would be an agent that is ready to run on a regular basis. Alternatively, you can use existing agents in the chat to get one-off tasks done that aren’t recurring.

This is a general outline of the structure of the repo:

We can start with Layer 1. You can think of this as the user’s entry point: it is where the user’s natural-language command is processed. The goal is to understand what the user is actually asking, which we call the intent. Once we strip away the filler words and surface structure of the prompt, we want to determine what action is being requested. Understanding the underlying intent is key because it tells us which integrations or tool calls are needed to execute the request. This initial processing (pulling out the intent, the constraints such as filters, limits, or formats, and the required integrations) is currently handled on the frontend, usually with an LLM.
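To make "structured intent" concrete, here is a minimal Python sketch, assuming the LLM is prompted to return JSON with `action`, `constraints`, and `integrations` keys. The `Intent` class, the `parse_intent` function, and the `KNOWN_INTEGRATIONS` set are hypothetical names for illustration, not code from the Logos repo.

```python
import json
from dataclasses import dataclass, field

# Hypothetical: the integrations this deployment knows how to call.
KNOWN_INTEGRATIONS = {"airtable", "gmail", "notion"}


@dataclass
class Intent:
    """Structured form of a user's request, stripped of filler words."""
    action: str                                        # what the user wants done
    constraints: dict = field(default_factory=dict)    # filters, limits, formats
    integrations: list = field(default_factory=list)   # tools needed to do it


def parse_intent(llm_output: str) -> Intent:
    """Validate the JSON an LLM returned for an intent-extraction prompt."""
    data = json.loads(llm_output)
    if not isinstance(data.get("action"), str) or not data["action"]:
        raise ValueError("intent is missing an 'action'")
    # Normalize names so "Airtable" and "airtable" refer to the same module.
    integrations = [name.lower() for name in data.get("integrations", [])]
    unknown = set(integrations) - KNOWN_INTEGRATIONS
    if unknown:
        raise ValueError(f"unsupported integrations: {sorted(unknown)}")
    return Intent(
        action=data["action"],
        constraints=data.get("constraints", {}),
        integrations=integrations,
    )


raw = '{"action": "match_hiring_needs_to_network", "constraints": {"limit": 5}, "integrations": ["Airtable", "Gmail"]}'
intent = parse_intent(raw)
print(intent.integrations)  # ['airtable', 'gmail']
```

Validating the LLM’s output before building anything on top of it means a hallucinated or unsupported integration fails early, at Layer 1, rather than inside a running agent.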

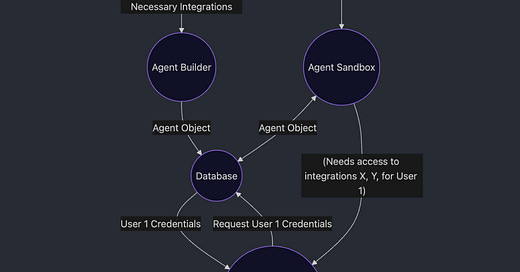

Once we have that structured intent, we move on to creating what we call the agent object. This is essentially a programmatic blueprint, one that describes the tools the agent will need, the sequence of calls it should make, and the constraints it should follow during execution. It’s almost like assembling a miniature workflow engine for that one task. The agent object is saved to the database so that it can be reused later (for recurring agents) or retrieved instantly if it is needed again.
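Here is one way an agent object could look, as a minimal sketch assuming a Python dataclass serialized to JSON and stored in SQLite. `AgentObject`, `save_agent`, `load_agent`, the `agents` table, and the step and operation names are all hypothetical; the repo’s actual schema and database may differ.

```python
import json
import sqlite3
from dataclasses import asdict, dataclass


@dataclass
class AgentObject:
    """A plan, not an executor: which tools, in what order, under what rules."""
    name: str
    tools: list        # integrations the agent needs
    steps: list        # ordered tool calls, e.g. {"tool": ..., "operation": ...}
    constraints: dict  # rules to respect while executing


def save_agent(conn: sqlite3.Connection, agent: AgentObject) -> int:
    """Persist the agent as JSON so it can be reused or re-run later."""
    cur = conn.execute(
        "INSERT INTO agents (name, spec) VALUES (?, ?)",
        (agent.name, json.dumps(asdict(agent))),
    )
    conn.commit()
    return cur.lastrowid


def load_agent(conn: sqlite3.Connection, agent_id: int) -> AgentObject:
    """Fetch a stored agent by id and rebuild the object."""
    row = conn.execute("SELECT spec FROM agents WHERE id = ?", (agent_id,)).fetchone()
    if row is None:
        raise KeyError(f"no agent with id {agent_id}")
    return AgentObject(**json.loads(row[0]))


# The VC example, expressed as a (hypothetical) agent object.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE agents (id INTEGER PRIMARY KEY, name TEXT, spec TEXT)")
vc_agent = AgentObject(
    name="portfolio-hiring-intros",
    tools=["airtable", "gmail"],
    steps=[
        {"tool": "airtable", "operation": "list_hiring_needs"},
        {"tool": "airtable", "operation": "list_network_contacts"},
        {"tool": "gmail", "operation": "send_introduction"},
    ],
    constraints={"max_intros_per_run": 3},
)
agent_id = save_agent(conn, vc_agent)
```

Storing the whole plan as one JSON document keeps the schema flexible while agents evolve; a production system might split steps into their own table once it needs to query them.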

The agent object itself doesn’t execute anything; it’s more like a plan. Execution happens in two major phases: credential handling and sandboxed runtime.

Once the agent is triggered, whether by user action, scheduling, or chaining from another task, we pass it into the agent sandbox. This is an isolated environment (think: Docker container, VM, or ephemeral runtime) where we execute agent logic safely, so that a failure in one agent can’t bring down the rest of the system. The sandbox pulls the agent object from the database and checks which tools or APIs it needs to talk to. If those tools require authenticated access (say, a user’s Notion workspace or Airtable base), the sandbox queries the MCP Gateway Server.
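A minimal sketch of the isolation idea, assuming agent logic can be expressed as a Python snippet: run it in a separate interpreter process with a timeout, so a crash or infinite loop is contained. `run_in_sandbox` is a hypothetical helper, and this is only process-level isolation, which is weaker than the Docker container or VM boundary described above.

```python
import subprocess
import sys


def run_in_sandbox(agent_code: str, timeout_s: float = 5.0) -> dict:
    """Run agent logic in a separate Python process so a crash or hang
    cannot take down the caller. Process isolation only; a real deployment
    would add a container or VM boundary on top of this."""
    try:
        proc = subprocess.run(
            # -I: isolated mode, ignores PYTHON* env vars and user site-packages
            [sys.executable, "-I", "-c", agent_code],
            capture_output=True,
            text=True,
            timeout=timeout_s,  # the child is killed if it runs too long
        )
    except subprocess.TimeoutExpired:
        return {"ok": False, "error": f"timed out after {timeout_s}s"}
    return {
        "ok": proc.returncode == 0,
        "stdout": proc.stdout,
        "stderr": proc.stderr,
    }


print(run_in_sandbox("print(2 + 3)")["stdout"].strip())  # 5
```

The caller only ever sees a result dictionary, so a misbehaving agent turns into an `"ok": False` record instead of an exception in the scheduler.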

The Gateway Server acts as a credential and routing brain. It checks whether we already have the user’s credentials stored securely. If not, it starts a flow to obtain them, usually via OAuth or a vault-backed login. Once credentials are in place, the gateway routes the agent’s tool calls to the correct internal integration modules. Each of these tools, called modules (like Integration X or Y), is defined in the repo and is responsible for calling an external API with the right formatting, token injection, retries, and response parsing.
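The gateway’s credential check and routing can be sketched as follows. This is a minimal illustration assuming an in-memory token store and modules registered as plain Python functions; `Gateway`, `AuthRequired`, and the method names are hypothetical, and a real deployment would keep tokens in a vault and obtain them through an OAuth flow rather than a direct `store_token` call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple

# A module receives the user's access token plus the call payload.
Module = Callable[[str, dict], dict]


class AuthRequired(Exception):
    """Raised when a user has not yet connected an integration."""

    def __init__(self, user_id: str, integration: str):
        super().__init__(f"{user_id} must authorize {integration}")
        self.user_id = user_id
        self.integration = integration


@dataclass
class Gateway:
    modules: Dict[str, Module] = field(default_factory=dict)
    # (user_id, integration) -> access token; stands in for a secure vault
    credentials: Dict[Tuple[str, str], str] = field(default_factory=dict)

    def register(self, integration: str, module: Module) -> None:
        self.modules[integration] = module

    def store_token(self, user_id: str, integration: str, token: str) -> None:
        # In a real flow this would run at the end of an OAuth callback.
        self.credentials[(user_id, integration)] = token

    def call(self, user_id: str, integration: str, payload: dict) -> dict:
        """Look up credentials, then route the call to the right module."""
        if integration not in self.modules:
            raise KeyError(f"no module registered for {integration}")
        token = self.credentials.get((user_id, integration))
        if token is None:
            # The caller should start the login flow, then retry the call.
            raise AuthRequired(user_id, integration)
        return self.modules[integration](token, payload)  # token injection
```

Raising `AuthRequired` instead of failing silently lets the sandbox pause the agent, send the user through the login flow, and retry the same call once a token exists.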

This modular tool-call design lets us build each integration once and reuse it across many agents. For example, our Airtable integration doesn’t care why it’s being called; it just takes in a schema and a payload, runs the call, and logs the result.
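A sketch of what a reusable, purpose-agnostic module body might look like, assuming a minimal schema that maps required field names to Python types and a `send` function standing in for the real HTTP request. `run_tool_call` and `TransientError` are hypothetical, and the retry count and exponential backoff are illustrative defaults, not values taken from Logos.

```python
import logging
import time
from typing import Callable

logger = logging.getLogger("integrations")


class TransientError(Exception):
    """Errors worth retrying, such as rate limits or timeouts."""


def run_tool_call(
    schema: dict,
    payload: dict,
    send: Callable[[dict], dict],
    retries: int = 3,
    backoff_s: float = 0.1,
) -> dict:
    """Validate the payload, send it, retry transient failures, log the outcome.

    `schema` maps required field names to types, e.g. {"table": str}.
    `send` is a stand-in for the HTTP call to the external API.
    """
    if retries < 1:
        raise ValueError("retries must be at least 1")
    for key, expected_type in schema.items():
        if not isinstance(payload.get(key), expected_type):
            raise ValueError(f"field {key!r} must be {expected_type.__name__}")

    for attempt in range(1, retries + 1):
        try:
            result = send(payload)
            logger.info("tool call succeeded on attempt %d", attempt)
            return result
        except TransientError as exc:
            logger.warning("attempt %d failed: %s", attempt, exc)
            if attempt == retries:
                raise  # out of retries: surface the last error
            time.sleep(backoff_s * 2 ** (attempt - 1))  # exponential backoff
```

Because the function only knows about a schema, a payload, and a transport, the same body could serve an Airtable call, a Gmail call, or anything else a module wraps.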

To recap: the agent is created from a natural language prompt → converted into a structured object → stored in the DB → pulled and sandboxed during execution → matched with user credentials → and finally runs tool calls through the MCP server layer.

The cool part is that this all runs in a decoupled, modular way. You can swap out integrations, build new agents from chat, or even cascade agents together to do multi-step automation. It’s a powerful way to create distributed automation workflows from scratch, using language alone.
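Cascading agents can be sketched as a simple pipeline in which each agent reads a shared context and adds its results for the next one. This assumes agents are plain Python functions; `cascade`, `find_openings`, and `draft_intros` are hypothetical stand-ins for the Airtable- and Gmail-backed agents in the VC example.

```python
from typing import Callable, Dict, List

# Hypothetical: an agent is any function that reads the shared context
# and returns a dict of new results to add to it.
Agent = Callable[[Dict], Dict]


def cascade(agents: List[Agent], context: Dict) -> Dict:
    """Run agents in order, passing each one everything produced so far."""
    ctx = dict(context)  # copy so the caller's dict is left untouched
    for agent in agents:
        ctx.update(agent(ctx))
    return ctx


def find_openings(ctx: Dict) -> Dict:
    # Stand-in for an agent that reads hiring needs from Airtable.
    return {"openings": ["Acme: CTO", "Globex: Head of Sales"]}


def draft_intros(ctx: Dict) -> Dict:
    # Stand-in for an agent that drafts Gmail introductions from earlier output.
    return {"drafts": [f"Intro for {opening}" for opening in ctx["openings"]]}


result = cascade([find_openings, draft_intros], {"user_id": "u1"})
print(result["drafts"])  # ['Intro for Acme: CTO', 'Intro for Globex: Head of Sales']
```

A real cascade would also need a policy for an agent failing partway through; this sketch simply lets the exception propagate.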
